Berkay Guler

Computer Science PhD Student in the Networked Systems Program

Center for Pervasive Communications and Computing (CPCC)

University of California, Irvine

As a current PhD student focusing on machine learning for wireless communications, and with prior experience as a machine learning engineer/researcher, I have gained exposure to various aspects of machine learning from both application and research perspectives. My current research explores channel understanding and representation learning for next-generation wireless systems across tasks such as beam management, CSI compression/feedback, localization, and channel prediction.

I am fortunate to be advised by Prof. Hamid Jafarkhani; his guidance and example motivate my work alongside our talented research team.

Beyond technical pursuits, I actively engage in volunteering, mentoring, and networking activities to develop my interpersonal skills. I enjoy connecting with individuals from diverse backgrounds and am always open to forging new connections and collaborations.

Get in Touch

Feel free to reach out! I welcome conversations with anyone interested in ML/AI/Wireless, or those simply looking to connect and exchange ideas :)

Updates

Best Paper Runner-Up at NeurIPS 2025 Workshop

Our paper earned the Best Paper Runner-Up Award (sponsored by Qualcomm) at the NeurIPS 2025 Workshop on AI and ML for Next-Generation Wireless Communications and Networking. The work introduces a noisy masked contrastive objective for learning robust, task-agnostic CSI representations that perform strongly across 5G/6G tasks without task-specific adaptation.

December 2025

Paper Accepted at NeurIPS 2025

Our work titled "Robust Channel Representation for Wireless: A Multi-Task Masked Contrastive Approach" has been accepted for an oral presentation at the AI4NextG Workshop at NeurIPS 2025 (the Annual Conference on Neural Information Processing Systems).

October 2025

Paper Submitted to IEEE JSAC

Our paper "A Multi-Task Foundation Model for Wireless Channel Representation Using Contrastive and Masked Autoencoder Learning" has been submitted to the IEEE Journal on Selected Areas in Communications (JSAC) special issue on "Large AI Models for Future Wireless Communication Systems". The revised paper is currently under review.

May 2025

Paper Accepted at ICC 2025

Our paper "AdaFortiTran: An Adaptive Transformer Model for Robust OFDM Channel Estimation" has been accepted for an oral presentation at ICC 2025 (IEEE International Conference on Communications).

February 2025

Research Papers

Robust Channel Representation for Wireless: A Multi-Task Masked Contrastive Approach

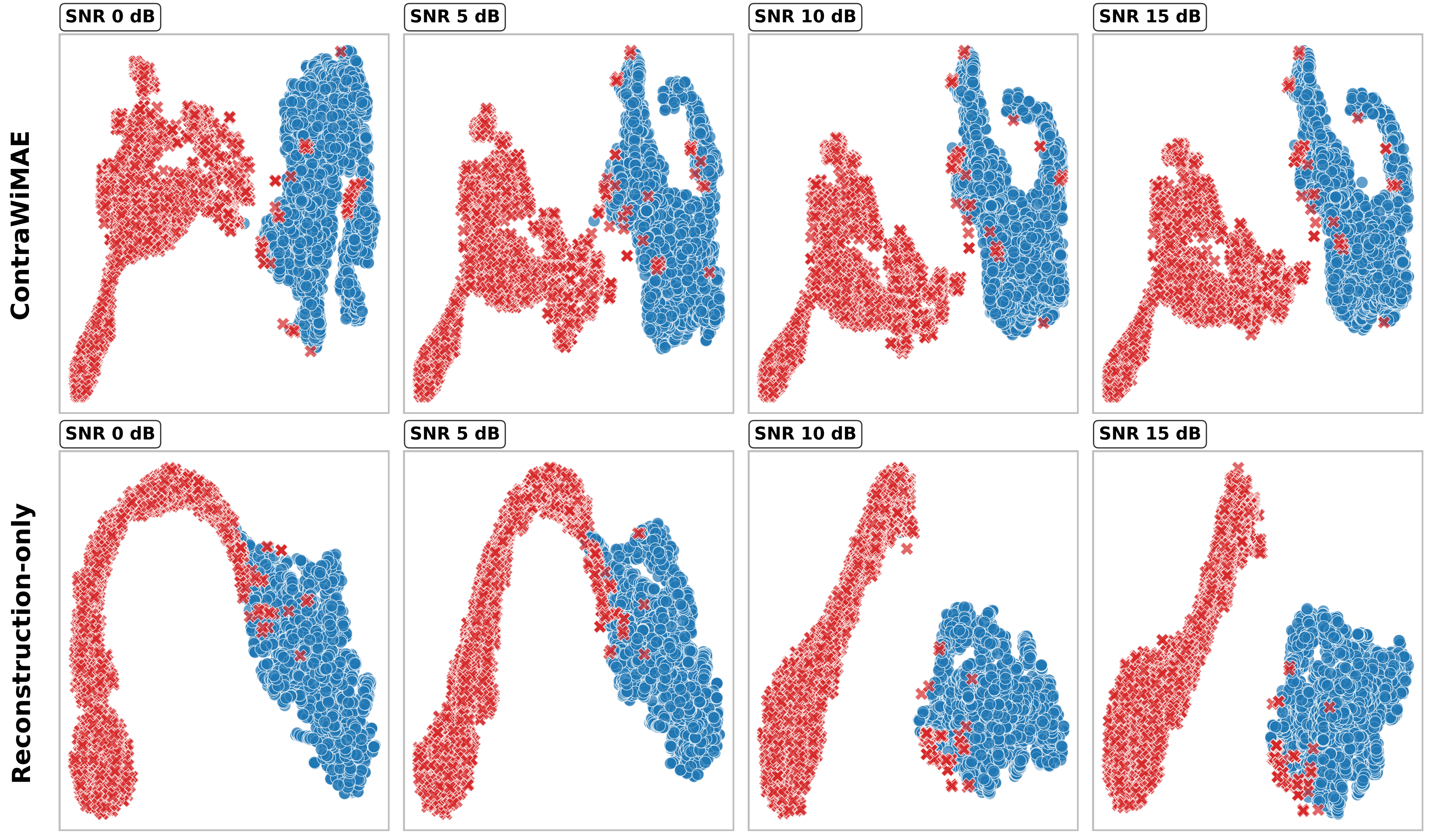

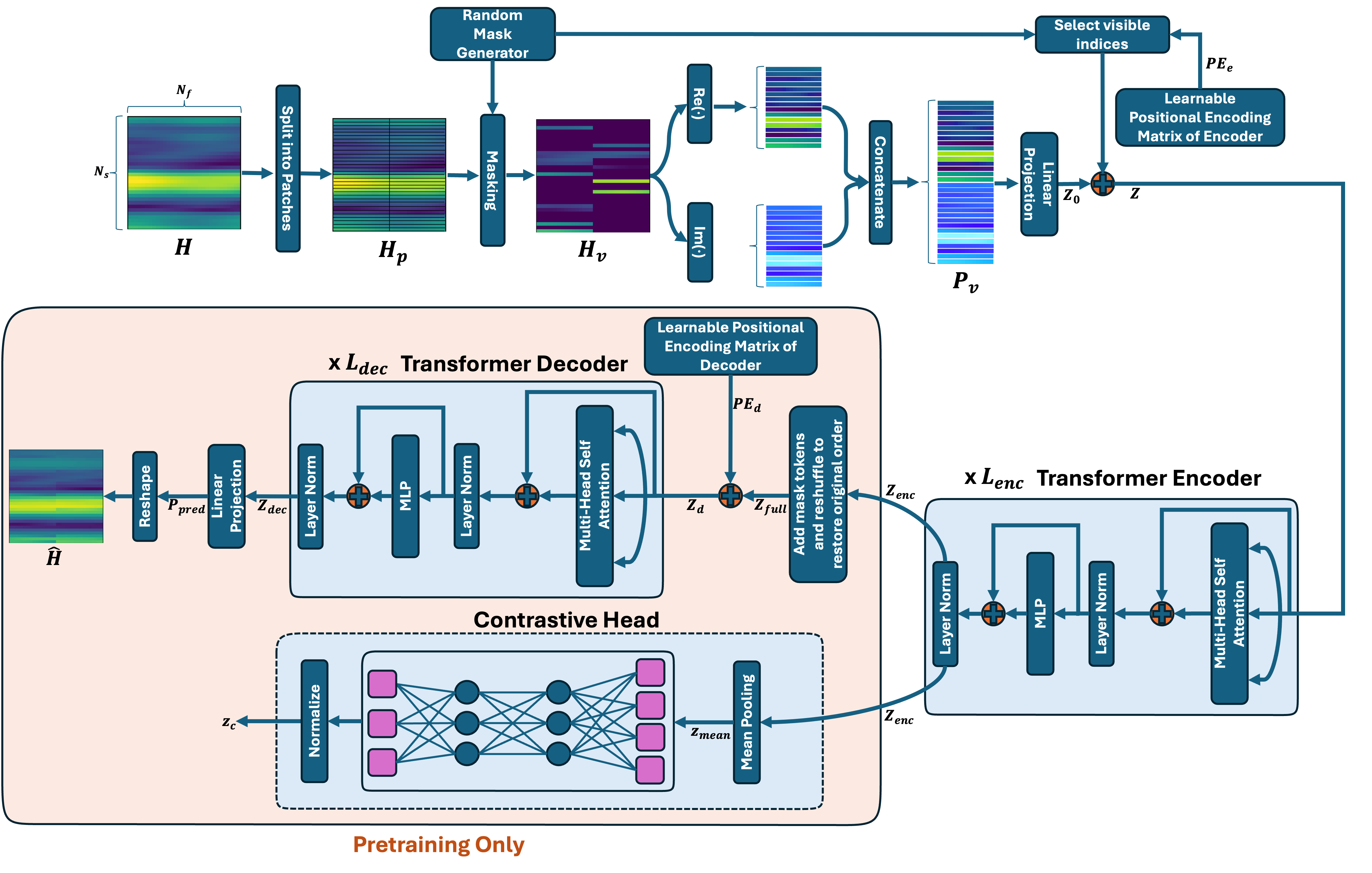

Wireless environments present unique challenges for machine learning (ML) due to their partial observability, multi-domain characteristics, and dynamic nature. To address these challenges, we propose ContraWiMAE (Wireless Masked Contrastive Autoencoder), a multi-task learning framework that learns robust representations from incomplete wireless channel observations while avoiding expensive augmentation engineering. Our approach combines masked autoencoder learning with a novel masked contrastive objective that contrasts differently masked versions of the same channel, leveraging the inherent complexity of wireless channels as a natural augmentation. We employ a curriculum learning strategy that systematically develops representations which maintain structural properties while enhancing discriminative capabilities. The framework enables learning task-specific invariances, which we demonstrate through noise invariance and improved linear separability on channel estimation and cross-frequency beam selection in unseen environments. Our experiments demonstrate superior representation stability under severe noise conditions, with performance close to that of supervised training.
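The masked contrastive idea can be illustrated with a small NumPy sketch. Each channel is split into patches, two independent random masks produce two partial views, and an InfoNCE loss pulls the two views of the same channel together while pushing apart views of different channels. This is not the ContraWiMAE implementation. The mean-pooled random projection standing in for the transformer encoder, the 60% mask ratio, the temperature of 0.1, and the synthetic Gaussian "channels" are all assumptions made for illustration. InfoNCE is used here as a standard contrastive loss, since the exact form of the paper's objective is not given on this page.

```python
import numpy as np

def random_patch_mask(num_patches, mask_ratio, rng):
    """Boolean mask where True marks a hidden (masked) patch."""
    num_masked = int(round(mask_ratio * num_patches))
    mask = np.zeros(num_patches, dtype=bool)
    mask[rng.choice(num_patches, size=num_masked, replace=False)] = True
    return mask

def encode_visible(patches, mask, proj):
    """Hypothetical stand-in encoder: project the visible patches and mean-pool them."""
    visible = patches[~mask]              # (num_visible, patch_dim)
    z = (visible @ proj).mean(axis=0)     # (embed_dim,)
    return z / np.linalg.norm(z)          # L2-normalize so dot products are cosine similarities

def info_nce(z_a, z_b, temperature=0.1):
    """InfoNCE over a batch: row i of z_a and row i of z_b form the positive pair."""
    logits = (z_a @ z_b.T) / temperature            # (B, B) scaled cosine similarities
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_probs))             # positives lie on the diagonal

rng = np.random.default_rng(0)
batch, num_patches, patch_dim, embed_dim = 8, 16, 32, 64
# Synthetic stand-in for patchified CSI (assumption: real Gaussian entries).
channels = rng.standard_normal((batch, num_patches, patch_dim))
proj = rng.standard_normal((patch_dim, embed_dim))   # hypothetical encoder weights

# Two independently masked views of every channel in the batch.
z_a = np.stack([encode_visible(h, random_patch_mask(num_patches, 0.6, rng), proj) for h in channels])
z_b = np.stack([encode_visible(h, random_patch_mask(num_patches, 0.6, rng), proj) for h in channels])
loss = info_nce(z_a, z_b)
print(f"masked contrastive loss: {loss:.3f}")
```

Because both views come from the same channel with different patches missing, no hand-designed augmentation is needed to form the positive pair; in a trained model, the encoder would learn to make these two embeddings agree.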

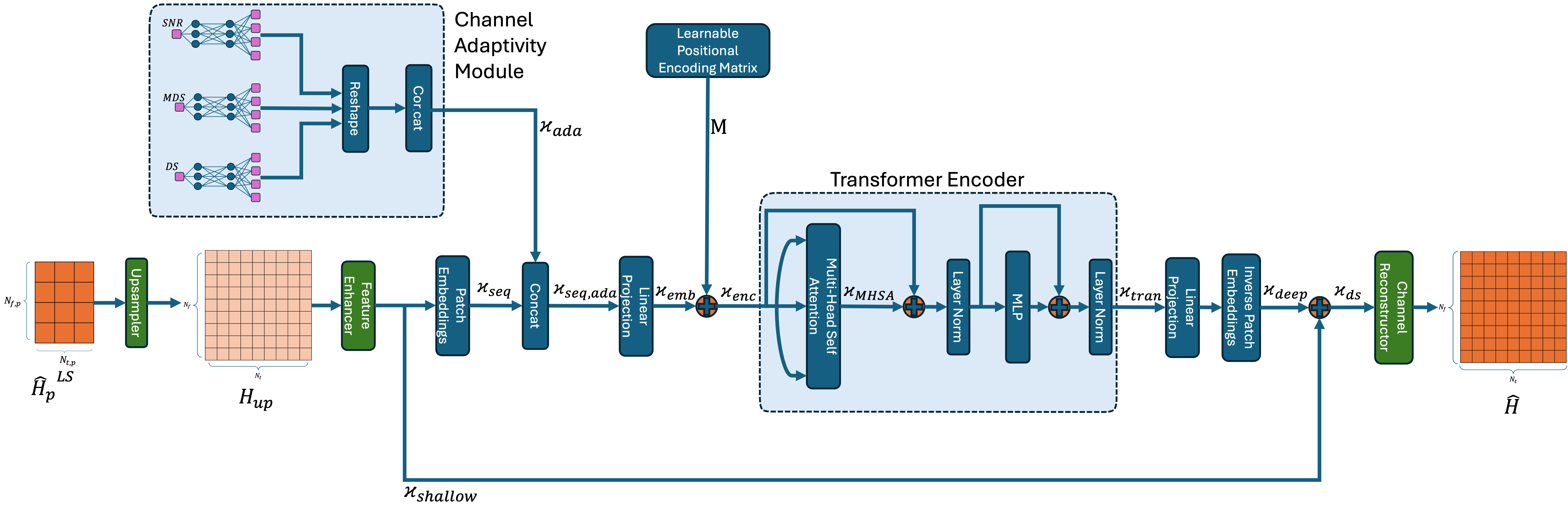

AdaFortiTran: An Adaptive Transformer Model for Robust OFDM Channel Estimation

Deep learning models for channel estimation in Orthogonal Frequency Division Multiplexing (OFDM) systems often suffer from performance degradation under fast-fading channels and in low-SNR scenarios. To address these limitations, we introduce the Adaptive Fortified Transformer (AdaFortiTran), a novel model specifically designed to enhance channel estimation in challenging environments. Our approach employs convolutional layers that exploit locality bias to capture strong correlations between neighboring channel elements, combined with a transformer encoder that applies global attention to channel patches. This combination effectively models both long-range dependencies and spectro-temporal interactions within single OFDM frames. We further improve the model's adaptability by integrating nonlinear representations of available channel statistics (SNR, delay spread, and Doppler shift) as priors. A residual connection merges global features from the transformer with local features from early convolutional processing, followed by final convolutional layers that refine the hierarchical channel representation. Despite its compact architecture, AdaFortiTran achieves up to a 6 dB reduction in mean squared error (MSE) compared to state-of-the-art models. Tested across a wide range of Doppler shifts (200–1000 Hz), SNRs (0–25 dB), and delay spreads (50–300 ns), it demonstrates superior robustness in high-mobility environments.
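To make the patch-based global attention step concrete, here is a minimal NumPy sketch of single-head scaled dot-product self-attention over patches of an OFDM channel grid. It is a hypothetical illustration, not AdaFortiTran itself. The 72-subcarrier by 14-symbol synthetic grid, the 12×7 patch size, stacking real and imaginary parts as two channels, and the random projection weights are all assumptions. The convolutional front end, the channel-statistic priors, and multi-head layers are omitted.

```python
import numpy as np

def patchify(grid, ph, pw):
    """Split a (subcarriers, symbols, channels) grid into flattened, non-overlapping patches."""
    F, T, C = grid.shape
    assert F % ph == 0 and T % pw == 0, "grid must tile exactly into patches"
    x = grid.reshape(F // ph, ph, T // pw, pw, C)
    x = x.transpose(0, 2, 1, 3, 4)          # (nF, nT, ph, pw, C)
    return x.reshape(-1, ph * pw * C)       # (num_patches, patch_dim)

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product attention: every patch attends to every other patch."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    weights = softmax(q @ k.T / np.sqrt(k.shape[-1]))  # (num_patches, num_patches)
    return weights @ v, weights

rng = np.random.default_rng(0)
# Synthetic complex channel grid (assumption): 72 subcarriers x 14 OFDM symbols.
h = rng.standard_normal((72, 14)) + 1j * rng.standard_normal((72, 14))
grid = np.stack([h.real, h.imag], axis=-1)   # (72, 14, 2)
tokens = patchify(grid, ph=12, pw=7)         # 6 x 2 = 12 patches of dimension 168

d_model = 32  # hypothetical embedding size
w_q, w_k, w_v = (rng.standard_normal((tokens.shape[1], d_model)) / np.sqrt(tokens.shape[1])
                 for _ in range(3))
global_feats, attn = self_attention(tokens, w_q, w_k, w_v)
print(tokens.shape, global_feats.shape, attn.shape)  # (12, 168) (12, 32) (12, 12)
```

Because each row of `attn` spans all patches, a patch at the lowest subcarriers and first symbols can draw on patches far away in frequency and time, which is the long-range modeling the convolutional layers alone lack. For scale, a 6 dB MSE reduction corresponds to a factor of 10^0.6 ≈ 3.98, i.e., roughly 4× lower MSE.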

A Multi-Task Foundation Model for Wireless Channel Representation Using Contrastive and Masked Autoencoder Learning

Current applications of self-supervised learning to wireless channel representation often borrow paradigms developed for text and image processing, without fully addressing the unique characteristics and constraints of wireless communications. Aiming to fill this gap, we first propose WiMAE (Wireless Masked Autoencoder), a transformer-based encoder-decoder foundation model pretrained on a realistic open-source multi-antenna wireless channel dataset. Building upon this foundation, we develop ContraWiMAE, which enhances WiMAE by incorporating a contrastive learning objective alongside the reconstruction task in a unified multi-task framework. By warm-starting from pretrained WiMAE weights and generating positive pairs via noise injection, the contrastive component enables the model to capture both structural and discriminative features, enhancing representation quality beyond what reconstruction alone can achieve. Through extensive evaluation on unseen scenarios, we demonstrate the effectiveness of both approaches across multiple downstream tasks, with ContraWiMAE showing further improvements in linear separability and adaptability in diverse wireless environments. Comparative evaluations against a state-of-the-art wireless channel foundation model confirm the superior performance and data efficiency of our models, highlighting their potential as powerful baselines for future research in self-supervised wireless channel representation learning.
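The two training signals described above can be sketched in NumPy: a WiMAE-style reconstruction loss computed only on masked patches, a ContraWiMAE-style positive pair built by noise injection, and a weighted sum of the two terms. This is a hypothetical illustration, not the authors' code. The 10 dB SNR of the noisy view, the 75% mask ratio, the weight `lam`, and the zero placeholder decoder output are all assumptions, and the contrastive term itself (for example, an InfoNCE loss between embeddings of the clean and noisy channels) is left as a placeholder.

```python
import numpy as np

def add_awgn(h, snr_db, rng):
    """Noisy view of a complex channel: add circular complex Gaussian noise at snr_db (dB)."""
    signal_power = np.mean(np.abs(h) ** 2)
    noise_power = signal_power / 10 ** (snr_db / 10)
    noise = np.sqrt(noise_power / 2) * (
        rng.standard_normal(h.shape) + 1j * rng.standard_normal(h.shape)
    )
    return h + noise

def masked_reconstruction_loss(target, recon, mask):
    """MAE-style loss: mean squared error over the masked (hidden) patches only."""
    return np.mean(np.abs(target[mask] - recon[mask]) ** 2)

def multitask_loss(rec_loss, con_loss, lam):
    """Weighted sum of reconstruction and contrastive terms (lam is a hypothetical weight)."""
    return rec_loss + lam * con_loss

rng = np.random.default_rng(0)
# Synthetic stand-in: 16 patches, each with 32 complex channel entries.
h = rng.standard_normal((16, 32)) + 1j * rng.standard_normal((16, 32))

# Positive pair: the clean channel and a noise-injected copy (assumed 10 dB SNR).
h_pos = add_awgn(h, snr_db=10.0, rng=rng)

# Masking: hide 12 of 16 patches (assumed 75% ratio).
mask = np.zeros(16, dtype=bool)
mask[rng.choice(16, size=12, replace=False)] = True

recon = np.zeros_like(h)  # placeholder for the decoder's reconstruction
con = 0.0                 # placeholder for a contrastive loss between embeddings of h and h_pos
total = multitask_loss(masked_reconstruction_loss(h, recon, mask), con, lam=0.1)
```

Computing the reconstruction error only on hidden patches forces the model to infer missing channel structure from the visible context, while the noisy positive pair encourages embeddings that stay stable under noise.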

Lectures

Understanding Attention: From Signals to Learning

An introductory exploration of attention mechanisms in machine learning, tracing their evolution from signal processing concepts to modern variants. The lecture explains how and why attention works, as well as where it falls short.